NVIDIA says its new H100 datacenter GPU is up to six times faster than its last

Partway through last year, NVIDIA announced Grace, its first-ever datacenter CPU. At the time, the company only shared a few tidbits of information about the chip, noting, for instance, that it would use its NVLink interconnect to provide data transfer speeds of up to 900 GB/s between components. Fast forward to the 2022 GPU Technology Conference, which kicked off on Tuesday morning. At the event, CEO Jensen Huang unveiled the Grace CPU Superchip, the first discrete CPU NVIDIA plans to release as part of its Grace lineup.

Built on ARM’s recently introduced v9 architecture, the Grace CPU Superchip is actually two Grace CPUs connected via the company’s aforementioned NVLink interconnect technology. It integrates a staggering 144 ARM cores into a single socket and consumes approximately 500 watts of power. Ultra-fast LPDDR5x memory built into the chip allows for memory bandwidth of up to 1 terabyte per second.

While they’re very different chips, a useful way to conceptualize NVIDIA’s new silicon is to think of Apple’s recently announced M1 Ultra. In the simplest terms, the M1 Ultra is made up of two M1 Max chips connected via Apple’s aptly named UltraFusion technology.

When NVIDIA begins shipping the Grace CPU Superchip to clients like the Department of Energy in the first half of 2023, it will offer them the option to configure it either as a standalone CPU system or as part of a server with up to eight Hopper-based GPUs (more on those in just a moment). The company claims its new chip is twice as fast as traditional servers. NVIDIA estimates it will achieve a score of approximately 740 points in SPECrate®2017_int_base benchmarks, putting it in the upper echelon of data center processors.

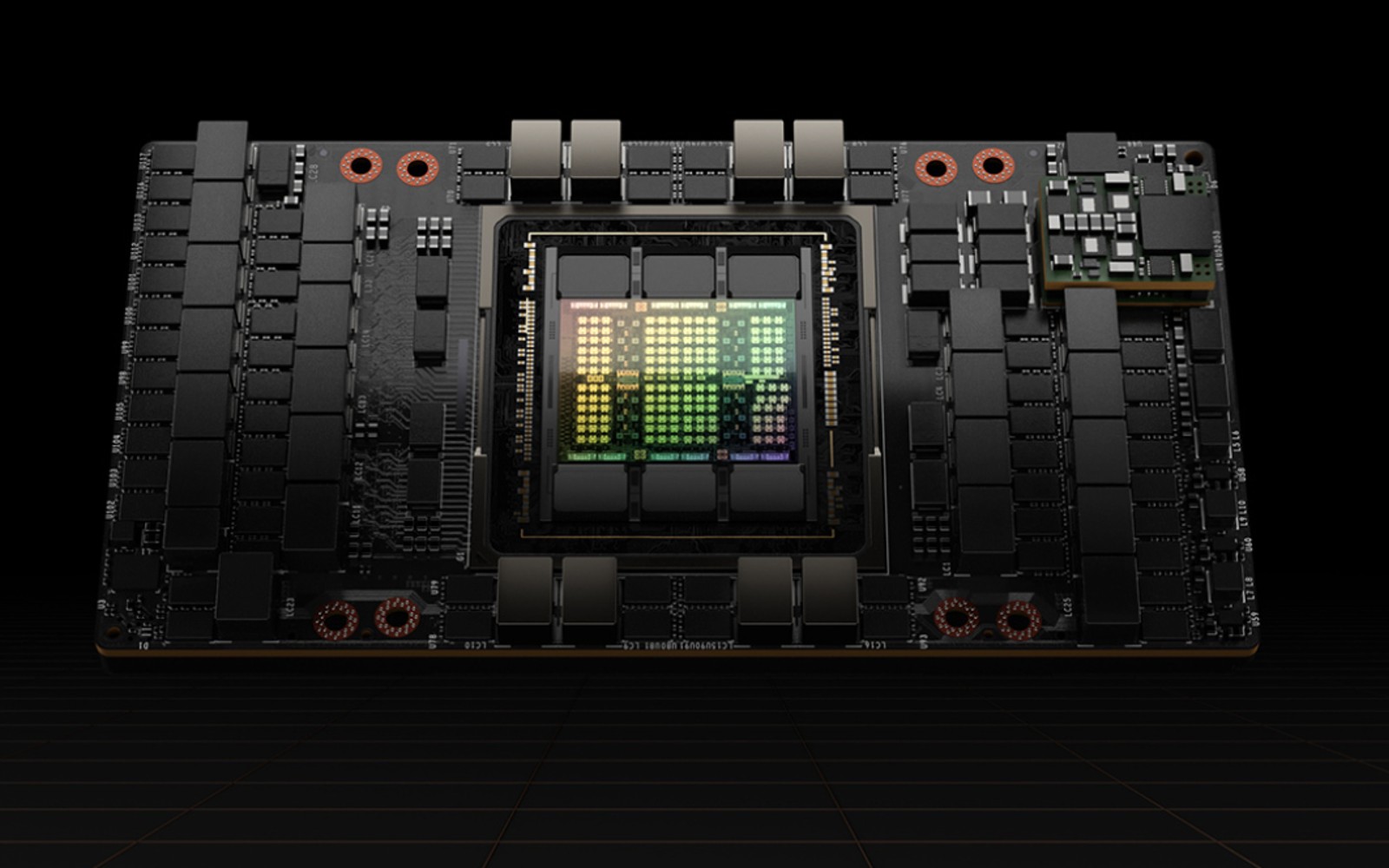

Alongside the Grace CPU Superchip, NVIDIA announced its highly anticipated Hopper architecture. Named after pioneering computer scientist Grace Hopper, it is the successor to the company’s current Ampere architecture (you know, the one that powers all of the company’s impossible-to-find RTX 30 series GPUs). Now before you get excited, know that NVIDIA didn’t announce any mainstream GPUs at GTC. Instead, we got to see the H100 GPU. It’s an 80 billion transistor behemoth built using TSMC’s cutting-edge 4nm process. At the heart of the H100 is NVIDIA’s new Transformer Engine, which the company claims allows it to offer unparalleled performance when computing transformer models. Over the past few years, transformer models have become widely popular with AI scientists working on systems like GPT-3 and AlphaFold. NVIDIA claims the H100 can reduce the time it takes to train large models down to days and even mere hours. The H100 will be available later this year.
