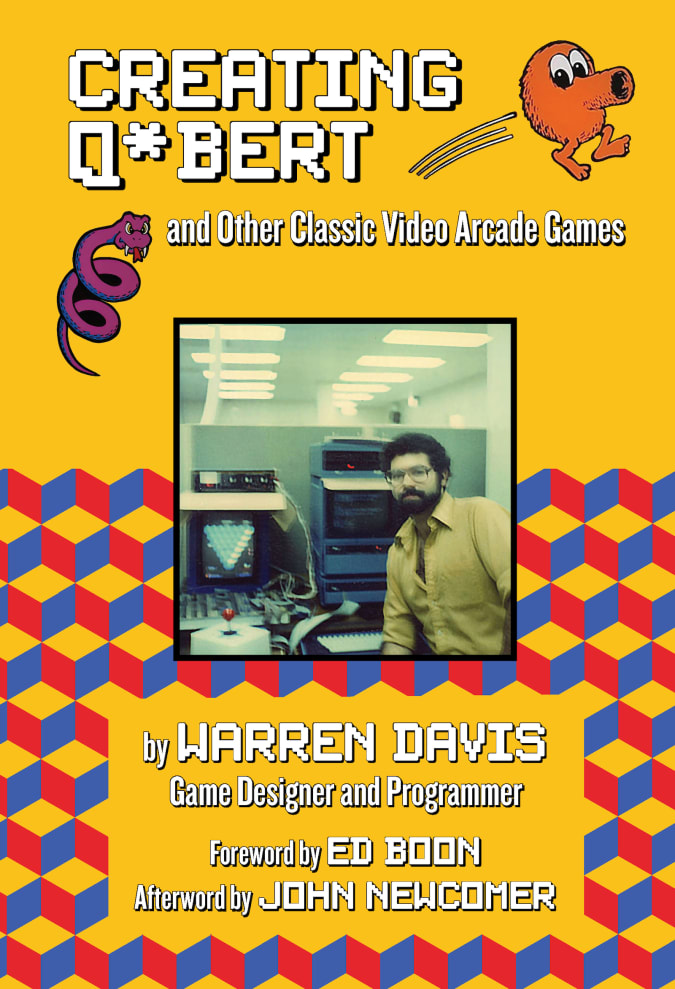

Hitting the Books: Amiga and the birth of 256-color gaming

With modern consoles offering gamers graphics so photorealistic that they blur the line between CGI and reality, it’s easy to forget just how cartoonishly blocky they were in the 8-bit era. In his new book, Creating Q*Bert and Other Classic Arcade Games, legendary game designer and programmer Warren Davis recalls his halcyon days imagining and designing some of the biggest hits to ever grace an arcade. In the excerpt below, Davis explains how the industry made its technological leap from 8- to 12-bit graphics.

©2021 Santa Monica Press

Back at my regular day job, I became particularly fascinated with a new product that came out for the Amiga computer: a video digitizer made by a company called A-Squared. Let’s unpack all that slowly.

The Amiga was a recently released home computer capable of unprecedented graphics and sound: 4,096 colors! Eight-bit stereo sound! There were image manipulation programs for it that could do things no other computer, including the IBM PC, could do. We had one at Williams not only because of its capabilities, but also because our own Jack Haeger, an immensely talented artist who’d worked on Sinistar at Williams a few years earlier, was also the art director for the Amiga design team.

Video digitization is the process of grabbing a video image from some video source, like a camera or a videotape, and converting it into pixel data that a computer system (or video game) could use. A full-color photograph might contain millions of colors, many just subtly different from one another. Even though the Amiga could only display 4,096 colors, that was enough to see an image on its monitor that looked almost perfectly photographic.

Our video game system still could only display 16 colors total. At that level, photographic images were just not possible. But we (and by that I mean everyone working in the video game industry) knew that would change. As memory became cheaper and processors faster, we knew that 256-color systems would soon be possible. In fact, when I started looking into digitized video, our hardware designer, Mark Loffredo, was already playing around with ideas for a new 256-color hardware system.

Let’s talk about color resolution for a second. Come on, you know you want to. No worries if you don’t, though, you can skip these next few paragraphs if you like. Color resolution is the number of colors a computer system is capable of displaying. And it’s all tied in to memory. For example, our video game system could display 16 colors. But artists weren’t locked into 16 specific colors. The hardware used a “palette.” Artists could choose from a fairly wide range of colors, but only 16 of them could be saved in the palette at any given time. Those colors could be programmed to change while a game was running. In fact, changing colors in a palette dynamically allowed for a common technique used in old video games called “color cycling.”
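As a rough illustration of color cycling, the sketch below rotates a range of palette entries while the pixel data stays untouched. The function name `cycle_palette`, the grayscale palette, and the choice of entries 4 through 7 are assumptions made for the example, not details from the Williams hardware.

```python
def cycle_palette(palette, start, end, step=1):
    """Return a new palette with entries start..end (inclusive) rotated by `step`.

    Pixels keep their palette indices; only the colors those indices
    point at change, which makes the affected pixels appear to animate.
    """
    span = palette[start:end + 1]
    step %= len(span)
    rotated = span[-step:] + span[:-step]
    return palette[:start] + rotated + palette[end + 1:]


# Hypothetical 16-entry palette of (r, g, b) tuples.
palette = [(i * 17, i * 17, i * 17) for i in range(16)]

# One animation tick: entries 4..7 shift by one position.
palette = cycle_palette(palette, 4, 7)
```

Calling `cycle_palette` once per frame on a band of water or fire colors gives motion without rewriting a single byte of screen memory, which is why the technique was cheap on slow hardware.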

For the hardware to know what color to display at each pixel location, each pixel on the screen had to be identified as one of those 16 colors in the palette. The collection of memory that contained the color values for every pixel on the screen was called “screen memory.” Numerically, it takes 4 bits (half a byte) to represent 16 numbers (trust me on the math here), so if 4 bits = 1 pixel, then 1 byte of memory could hold 2 pixels. By contrast, if you wanted to be able to display 256 colors, it would take 8 bits to represent 256 numbers. That’s 1 byte (or 8 bits) per pixel.

So you’d need twice as much screen memory to display 256 colors as you would to display 16. Memory wasn’t cheap, though, and game manufacturers wanted to keep costs down as much as possible. So memory prices had to drop before management approved doubling the screen memory.
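The arithmetic above can be checked with a short sketch. The 320x240 resolution is a hypothetical figure chosen for illustration (the excerpt does not give the actual screen size), and placing the first pixel in the high nibble is likewise an assumption.

```python
def bits_per_pixel(colors):
    """Bits needed to index `colors` palette entries (colors >= 2)."""
    return (colors - 1).bit_length()


def screen_memory_bytes(width, height, colors):
    """Bytes of screen memory for a width x height display, rounded up."""
    total_bits = width * height * bits_per_pixel(colors)
    return (total_bits + 7) // 8


def pack_4bpp(indices):
    """Pack 4-bit palette indices two per byte, first pixel in the high nibble."""
    if any(not 0 <= i <= 15 for i in indices):
        raise ValueError("4-bit indices must be in 0..15")
    padded = list(indices) + [0] * (len(indices) % 2)
    return bytes((padded[i] << 4) | padded[i + 1] for i in range(0, len(padded), 2))


# Hypothetical 320x240 screen: 256 colors needs twice the memory of 16.
print(screen_memory_bytes(320, 240, 16))   # 38400
print(screen_memory_bytes(320, 240, 256))  # 76800
```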

Today we take for granted color resolutions of 24 bits per pixel (which potentially allows up to 16,777,216 colors and true photographic quality). But back then, 256 colors seemed like such a luxury. Even though it didn’t approach the 4,096 colors of the Amiga, I was convinced that such a system could result in close to photo-realistic images. And the idea of having movie-quality images in a video game was very exciting to me, so I pitched to management the advantages of getting a head start on this technology. They agreed and bought the digitizer for me to play around with.

The Amiga’s digitizer was crude. Very crude. It came with a piece of hardware that plugged into the Amiga on one end, and to the video output of a black-and-white surveillance camera (sold separately) on the other. The camera needed to be mounted on a tripod so it didn’t move. You pointed it at something (that also couldn’t move), and put a color wheel between the camera and the subject. The color wheel was a circular piece of plastic divided into quarters with different tints: red, green, blue, and clear.

When you started the digitizing process, a motor turned the color wheel very slowly, and in about thirty to forty seconds you had a full-color digitized image of your subject. “Full-color” on the Amiga meant 4 bits each of red, green, and blue, or 12-bit color, resulting in a total of 4,096 colors possible.
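To make the 12-bit figure concrete, here is a minimal sketch that packs three 4-bit channels into one value and counts the possible colors. The bit layout (red in the top nibble, blue in the bottom) and the function names are assumptions for illustration; the excerpt does not describe how the digitizer stored its data.

```python
def color_count(bits_per_channel):
    """Number of distinct colors with this many bits for each of R, G, B."""
    return 2 ** (3 * bits_per_channel)


def pack_rgb444(r, g, b):
    """Pack three 4-bit channel values (0..15) into one 12-bit integer."""
    for c in (r, g, b):
        if not 0 <= c <= 15:
            raise ValueError("channel values must be in 0..15")
    return (r << 8) | (g << 4) | b


def unpack_rgb444(value):
    """Split a 12-bit color back into its (r, g, b) channels."""
    return (value >> 8) & 0xF, (value >> 4) & 0xF, value & 0xF


def rgb888_to_rgb444(r, g, b):
    """Reduce 8-bit channels to 4 bits by dropping the low 4 bits of each."""
    return pack_rgb444(r >> 4, g >> 4, b >> 4)


print(color_count(4))  # 4096
```

The same `color_count` function gives 32,768 for 5 bits per channel and 16,777,216 for 8 bits per channel, matching the other figures quoted in the text.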

It’s hard to believe just how exciting this was! At that time, it was like something from science fiction. And the coolness of it wasn’t so much how it worked (because it was pretty damn clunky) but the potential that was there. The Amiga digitizer wasn’t practical—the camera and subject needed to be still for so long, and the time it took to grab each image made the process mind-numbingly slow—but just having the ability to produce 12-bit images at all enabled me to start exploring algorithms for color reduction.

Color reduction is the process of taking an image with a lot of colors (say, up to the 16,777,216 possible colors in a 24-bit image) and finding a smaller number of colors (say, 256) to best represent that image. If you could do that, then those 256 colors would form a palette, and every pixel in the image would be represented by a number—an “index” that pointed to one of the colors in that palette. As I mentioned earlier, with a palette of 256 colors, each index could fit into a single byte.

But I needed an algorithm to figure out how to pick the best 256 colors out of the thousands that might be present in a digitized image. Since there was no internet back then, I went to libraries and began combing through academic journals and technical magazines, searching for research done in this area. Eventually, I found some! There were numerous papers written on the subject, each outlining a different approach, some easier to understand than others. Over the next few weeks, I implemented a few of these algorithms for generating 256 color palettes using test images from the Amiga digitizer. Some gave better results than others. Images that were inherently monochromatic looked the best, since many of the 256 colors could be allotted to different shades of a single color.
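The excerpt does not say which algorithms Davis implemented. As one example of the kind of approach found in the literature, the sketch below uses median cut, a well-known method that repeatedly splits the set of pixel colors along its widest channel and averages each resulting group into one palette entry. All function names here are illustrative.

```python
def median_cut(pixels, max_colors):
    """Reduce a list of (r, g, b) tuples to at most max_colors palette entries."""
    if not pixels:
        raise ValueError("need at least one pixel")
    boxes = [list(pixels)]
    while len(boxes) < max_colors:
        # Find the box and channel with the widest spread of values.
        best = None
        for i, box in enumerate(boxes):
            if len(set(box)) < 2:
                continue  # a single distinct color cannot be split further
            for ch in range(3):
                values = [p[ch] for p in box]
                spread = max(values) - min(values)
                if best is None or spread > best[0]:
                    best = (spread, i, ch)
        if best is None:
            break  # every box holds one distinct color
        _, i, ch = best
        box = sorted(boxes.pop(i), key=lambda p: p[ch])
        mid = len(box) // 2
        boxes += [box[:mid], box[mid:]]
    # Each palette entry is the average color of one box.
    return [tuple(sum(p[ch] for p in box) // len(box) for ch in range(3))
            for box in boxes]


def nearest_index(color, palette):
    """Index of the palette entry closest to `color` (squared RGB distance)."""
    return min(range(len(palette)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(color, palette[i])))


def quantize(pixels, max_colors=256):
    """Return (palette, indices): one palette index per input pixel."""
    palette = median_cut(pixels, max_colors)
    return palette, [nearest_index(p, palette) for p in pixels]
```

This also shows why near-monochromatic images come out well: when the pixels vary mostly along one channel, nearly every split lands on that channel, so the palette ends up with many fine gradations of a single hue.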

During this time, Loffredo was busy developing his 256-color hardware. His plan was to support multiple circuit boards, which could be inserted into slots as needed, much like a PC. A single board would give you one surface plane to draw on. A second board gave you two planes, foreground and background, and so on. With enough planes, and by having each plane scroll horizontally at a slightly different rate, you could give the illusion of depth in a side-scrolling game.
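The depth illusion described here is commonly called parallax scrolling. The following software sketch shows the idea for a single row of pixels; it is not a model of Loffredo's hardware, and the scroll speeds, the wrap-around behavior, and the use of index 0 as "transparent" are all assumptions.

```python
def plane_offsets(camera_x, speeds):
    """Horizontal scroll offset for each plane, given per-plane speed factors."""
    return [int(camera_x * s) for s in speeds]


def composite_row(planes, offsets, width, transparent=0):
    """Draw one row of each plane back to front, wrapping horizontally.

    `planes` is ordered from farthest to nearest; each plane is a list of
    palette indices. Nearer planes overwrite farther ones except where
    they hold the transparent index.
    """
    row = [transparent] * width
    for plane, offset in zip(planes, offsets):
        for x in range(width):
            pixel = plane[(x + offset) % len(plane)]
            if pixel != transparent:
                row[x] = pixel
    return row


# Hypothetical two-plane scene: the background scrolls at half speed.
background = [1, 1, 2, 2]
foreground = [0, 3, 0, 0]
offsets = plane_offsets(camera_x=2, speeds=[0.5, 1.0])
print(composite_row([background, foreground], offsets, width=4))
```

Because the far plane moves fewer pixels per frame than the near one, the eye reads it as more distant, just as distant scenery appears to move slowly from a car window.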

All was moving along smoothly until the day word came down that Eugene Jarvis had completed his MBA and was returning to Williams to head up the video department. This was big news! I think most people were pretty excited about this. I know I was, because despite our movement toward 256-color hardware, the video department was still without a strong leader at the helm. Eugene, given his already legendary status at Williams, was the perfect person to take the lead, partly because he had some strong ideas of where to take the department, and also due to management’s faith in him. Whereas anybody else would have to convince management to go along with an idea, Eugene pretty much had carte blanche in their eyes. Once he was back, he told management what we needed to do and they made sure he, and we, had the resources to do it.

This meant, however, that Loffredo’s planar hardware system was toast. Eugene had his own ideas, and everyone quickly jumped on board. He wanted to create a 256-color system based on a new CPU chip from Texas Instruments, the 34010 GSP (Graphics System Processor). The 34010 was revolutionary in that it included graphics-related features within its core. Normally, CPUs would have no direct connection to the graphics portion of the hardware, though there might be some co-processor to handle graphics chores (such as Williams’ proprietary VLSI blitter). But the 34010 had that capability on board, obviating the need for a graphics co-processor.

Looking at the 34010’s specs, however, revealed that the speed of its graphics functions, while well-suited for light graphics work such as spreadsheets and word processors, was certainly not fast enough for pushing pixels the way we needed. So Mark Loffredo went back to the drawing board to design a VLSI blitter chip for the new system.

Around this time, a new piece of hardware arrived in the marketplace that signaled the next generation of video digitizing. It was called the Image Capture Board (ICB), and it was developed by a group within AT&T called the EPICenter (which eventually split from AT&T and became Truevision). The ICB was one of three boards offered, the others being the VDA (Video Display Adapter, with no digitizing capability) and the Targa (which came in three different configurations: 8-bit, 16-bit, and 24-bit). The ICB came with a piece of software called TIPS that allowed you to digitize images and do some minor editing on them. All of these boards were designed to plug into an internal slot on a PC running MS-DOS, the original text-based operating system for the IBM PC. (You may be wondering . . . where was Windows? Windows 1.0 was introduced in 1985, but it was terribly clunky and not widely used or accepted. Windows really didn’t achieve any kind of popularity until version 3.0, which arrived in 1990, a few years after the release of Truevision’s boards.)

A little bit of trivia: the TGA file format that’s still around today (though not as popular as it once was) was created by Truevision for the TARGA series of boards. The ICB was a huge leap forward from the Amiga digitizer in that you could use a color video camera (no more black-and-white camera or color wheel), and the time to grab a frame was drastically reduced: not quite instantaneous, as I recall, but only a second or two, rather than thirty or forty seconds. And it internally stored colors as 16 bits, rather than 12 like the Amiga. This meant 5 bits each of red, green, and blue (the same that our game hardware used), resulting in a true-color image of up to 32,768 colors, rather than 4,096. Palette reduction would still be a crucial step in the process. The greatest thing about the Truevision boards was that they came with a Software Development Kit (SDK), which meant I could write my own software to control the board, tailoring it to my specific needs. This was truly amazing! Once again, I was so excited about the possibilities that my head was spinning.

I think it’s safe to say that most people making video games in those days thought about the future. We realized that the speed and memory limitations we were forced to work under were a temporary constraint. We realized that whether the video game industry was a fad or not, we were at the forefront of a new form of storytelling. Maybe this was a little more true for me because of my interest in filmmaking, or maybe not. But my experiences so far in the game industry fueled my imagination about what might come. And for me, the holy grail was interactive movies. The notion of telling a story in which the player was not a passive viewer but an active participant was extremely compelling. People were already experimenting with it under the constraints of current technology. Zork and the rest of Infocom’s text adventure games were probably the earliest examples, and more would follow with every improvement in technology. But what I didn’t know was if the technology needed to achieve my end goal—fully interactive movies with film-quality graphics—would ever be possible in my lifetime. I didn’t dwell on these visions of the future. They were just thoughts in my head. Yet, while it’s nice to dream, at some point you’ve got to come back down to earth. If you don’t take the one step in front of you, you can be sure you’ll never reach your ultimate destination, wherever that may be.

I dove into the task and began learning the specific capabilities of the board, as well as its limitations. With the first iteration of my software, which I dubbed WTARG (“W” for Williams, “TARG” for TARGA), you could grab a single image from either a live camera or a videotape. I added a few different palette reduction algorithms so you could try each and find the best palette for that image. More importantly, I added the ability to find the best palette for a group of images, since all the images of an animation needed to have a consistent look. There was no chroma key functionality in those early boards, so artists would have to erase the background manually. I added some tools to help them do that.
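Finding one palette for a group of images amounts to pooling the colors of every frame before reducing them. The sketch below does this with the popularity method (keep the most frequently used colors), chosen only because it is the simplest to show; it is not a description of how WTARG worked, and the function names and sample frames are made up for the example.

```python
from collections import Counter


def shared_palette(frames, max_colors=256):
    """Pick the most common colors across all frames as a single palette.

    Each frame is a list of (r, g, b) tuples. Pooling the counts means
    every frame of an animation is later drawn from the same colors.
    """
    counts = Counter()
    for frame in frames:
        counts.update(frame)
    return [color for color, _ in counts.most_common(max_colors)]


def remap(frame, palette):
    """Replace each pixel with the index of its nearest palette color."""
    def distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return [min(range(len(palette)), key=lambda i: distance(pixel, palette[i]))
            for pixel in frame]


# Two hypothetical animation frames sharing a 2-color palette.
frame_a = [(255, 0, 0)] * 4 + [(0, 255, 0)]
frame_b = [(0, 255, 0)] * 2 + [(0, 200, 50)]
palette = shared_palette([frame_a, frame_b], max_colors=2)
indexed = [remap(frame, palette) for frame in (frame_a, frame_b)]
```

Reducing each frame separately would give every frame its own best palette, but the colors would shift from frame to frame during playback; a shared palette trades a little per-frame accuracy for a consistent look.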

This was a far cry from what I ultimately hoped for, which was a system where we could point a camera at live actors and instantly have an animation of their action running on our game hardware. But it was a start.
